Spark on YARN Deployment Modes

YARN Client mode

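- ① The YARN Client submits the application to the ResourceManager.

- ② On receiving the request, the ResourceManager selects a NodeManager in the cluster, allocates a Container on it, and starts the ApplicationMaster process in that Container.

- The Driver process runs on the YARN Client and initializes the SparkContext.

- ③ Once the SparkContext is initialized, it communicates with the ApplicationMaster, which requests Containers from the ResourceManager. On receiving the request, the ResourceManager selects a NodeManager in the cluster and allocates a Container on it.

- ④ The ApplicationMaster tells the NodeManager to launch a Spark executor in the Container.

- ⑤ Each Spark executor registers with the Driver and afterwards reports its status back to the Driver.

- ⑥ The SparkContext assigns Tasks to the Spark executors.

- ⑦ When all Tasks have finished, the YARN Client asks the ResourceManager to deregister the ApplicationMaster.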

YARN Cluster mode

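The other steps are the same as in Client mode; only the differences are listed.

- ② The ApplicationMaster process runs the Driver and initializes the SparkContext, so the SparkContext runs on the same cluster node as the ApplicationMaster.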

- ⑤ Each Spark executor registers with the ApplicationMaster (which hosts the Driver) and afterwards reports its status back to the ApplicationMaster.
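The two launch sequences can be compared side by side with a small model. This is a minimal sketch, assuming the actor and action strings below as plain labels (they are not Spark or YARN identifiers); the step numbers in the comments follow the Client mode list. The cluster-mode deregistration step is not described above, so the sketch assumes the ApplicationMaster performs it there.

```python
from typing import List, Tuple

# (actor, action) pairs. The labels are plain descriptive strings chosen for
# this sketch; they are not identifiers from the Spark or YARN code base.
Step = Tuple[str, str]


def launch_sequence(mode: str) -> List[Step]:
    """Return the ordered launch steps for the 'client' or 'cluster' deploy mode."""
    if mode not in ("client", "cluster"):
        raise ValueError(f"unknown deploy mode: {mode!r}")
    # Client mode: the Driver lives in the submitting process.
    # Cluster mode: the Driver lives inside the ApplicationMaster's Container.
    driver_host = "YARN Client" if mode == "client" else "ApplicationMaster"
    return [
        ("YARN Client", "submit application to ResourceManager"),              # ①
        ("ResourceManager", "allocate Container, start ApplicationMaster"),     # ②
        (driver_host, "run Driver, initialize SparkContext"),
        ("ApplicationMaster", "request executor Containers from ResourceManager"),  # ③
        ("ApplicationMaster", "ask NodeManager to launch Spark executor"),      # ④
        ("Spark executor", f"register with Driver on {driver_host}"),          # ⑤
        ("SparkContext", "assign Tasks to Spark executors"),                   # ⑥
        (driver_host, "deregister ApplicationMaster with ResourceManager"),    # ⑦ (assumed actor in cluster mode)
    ]


if __name__ == "__main__":
    for mode in ("client", "cluster"):
        print(f"--- {mode} mode ---")
        for actor, action in launch_sequence(mode):
            print(f"{actor:>17}: {action}")
```

Only the host of the Driver changes between the two modes, which is why the model derives every mode-specific step from a single `driver_host` value.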

YARN Cluster vs Client

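- Spark driver:

  - YARN Client: runs locally on the machine that submitted the Application

  - YARN Cluster: runs on the same cluster node as the ApplicationMaster

  - In either mode, the Driver must communicate with the executors running in NodeManager Containers, so keeping the Driver in the same cluster as the NodeManagers reduces network transfer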

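- ApplicationMaster:

  - YARN Client: only requests resources; the Spark driver monitors Task execution, so the Client must not exit during the whole Application lifecycle

  - YARN Cluster: both requests resources and monitors Task execution, so the Client can exit after submission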

- Interactive Spark:

  - Interactive Spark applications, such as spark-shell and pyspark, cannot run in Cluster mode

- Client network:

  - Keep the Client in the same cluster as the ResourceManager and NodeManagers where possible, to reduce network transfer

- Client load:

  - YARN Client: the Driver consumes resources on the submitting machine, so make sure that machine has enough resources
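The mode choice above can be expressed as a small helper that builds a launch command and refuses cluster mode for interactive shells. This is a sketch under stated assumptions: `--master yarn`, `--deploy-mode`, `--num-executors` and `--class` are standard spark-submit options, while the helper name `yarn_submit_args`, the default of 2 executors, and the names `wordcount.jar` / `WordCount` are hypothetical.

```python
import shlex
from typing import List, Optional

# Launchers listed above as interactive. Their Driver must stay attached to
# the local terminal, so this sketch limits them to client mode.
INTERACTIVE_LAUNCHERS = {"spark-shell", "pyspark"}


def yarn_submit_args(app: str, deploy_mode: str,
                     main_class: Optional[str] = None,
                     num_executors: int = 2) -> List[str]:
    """Build an argument list for launching `app` on YARN in the given mode."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError(f"unknown deploy mode: {deploy_mode!r}")
    interactive = app in INTERACTIVE_LAUNCHERS
    if interactive and deploy_mode == "cluster":
        raise ValueError(f"{app} is interactive and cannot run in cluster mode")
    # Interactive shells are launched directly; batch jobs go through spark-submit.
    args = [app if interactive else "spark-submit",
            "--master", "yarn",
            "--deploy-mode", deploy_mode,
            "--num-executors", str(num_executors)]
    if main_class is not None:
        args += ["--class", main_class]
    if not interactive:
        args.append(app)  # the application JAR or .py file comes last
    return args


if __name__ == "__main__":
    # "wordcount.jar" and "WordCount" are hypothetical names for illustration.
    print(shlex.join(yarn_submit_args("wordcount.jar", "cluster", main_class="WordCount")))
    print(shlex.join(yarn_submit_args("pyspark", "client")))
```

Failing early on `spark-shell`/`pyspark` with cluster mode mirrors the restriction above instead of leaving it to the launcher to reject the combination.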

Word Count on YARN Deployment Modes
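The job's source is not shown here, so as an assumption the sketch below reproduces the usual word count pipeline (split lines into words, map each word to `(word, 1)`, then sum per key) in plain local Python. It illustrates the logic only; it is not Spark code and not the program used in these runs, and the function names are hypothetical.

```python
from typing import Dict, Iterable, Iterator


def flat_map_words(lines: Iterable[str]) -> Iterator[str]:
    # Analogous to a flatMap step: one line -> many words (split on whitespace).
    for line in lines:
        yield from line.split()


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Analogous to map(word -> (word, 1)) followed by a per-key sum (reduceByKey).
    pairs = ((word, 1) for word in flat_map_words(lines))
    counts: Dict[str, int] = {}
    for word, one in pairs:
        counts[word] = counts.get(word, 0) + one
    return counts


if __name__ == "__main__":
    print(word_count(["hello spark", "hello yarn"]))
```

On a cluster, the per-key sum is what forces a shuffle between executors; locally it is just a dictionary update.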

YARN Client mode


Jps Information


- CoarseGrainedExecutorBackend

  - The two processes correspond to the two executor Containers

  - executor-id gives the executor's own ID

  - app-id gives the ID of the Application the executor belongs to

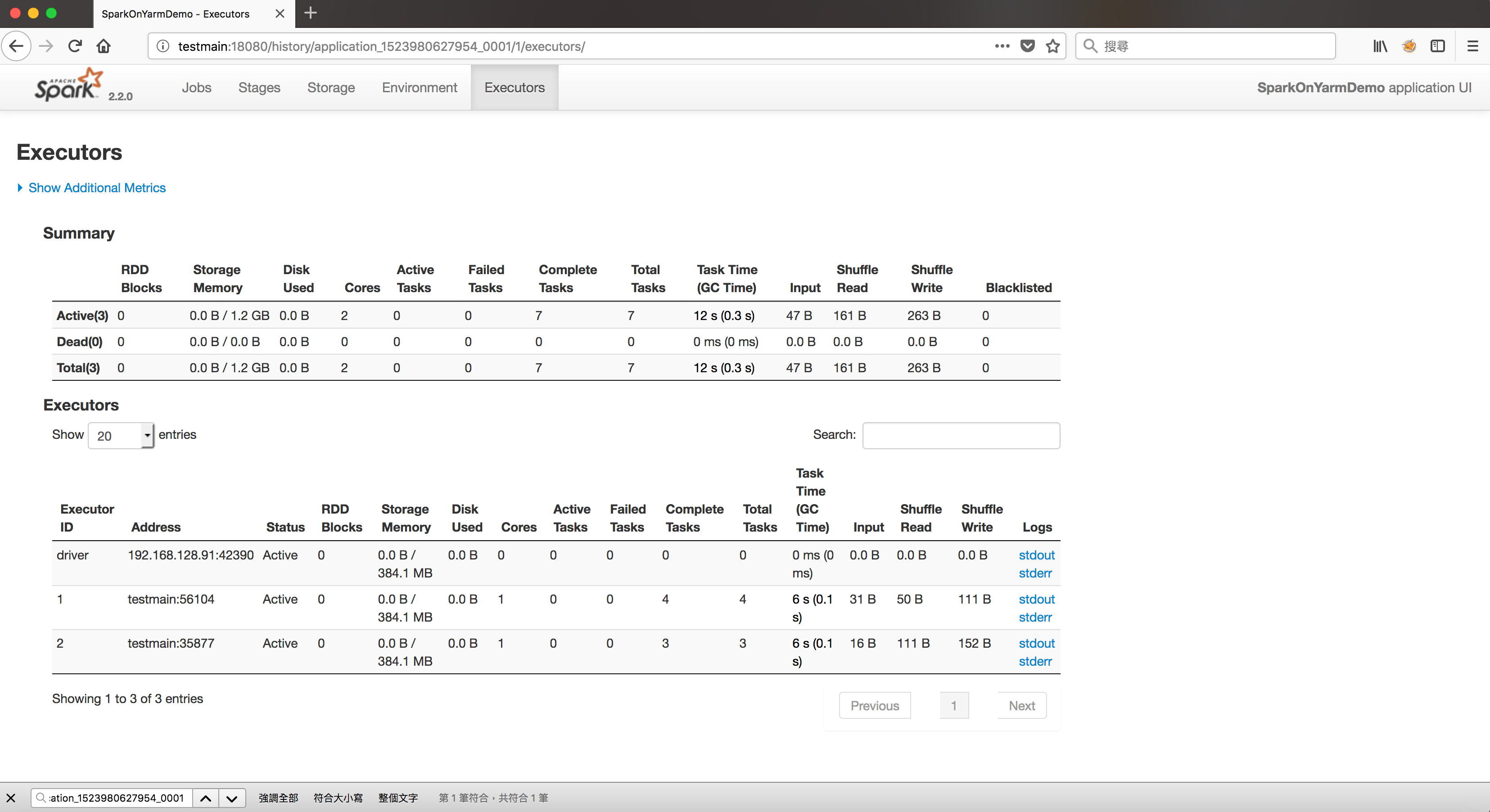

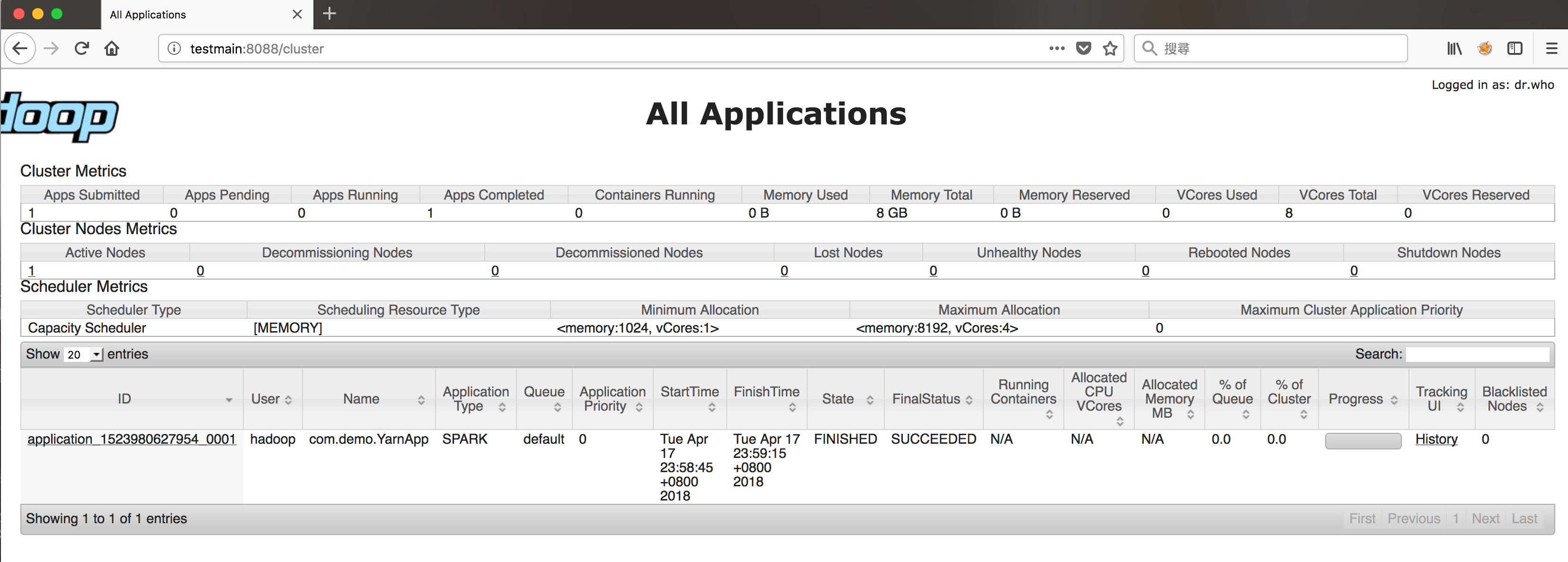
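The `--executor-id` and `--app-id` values can also be pulled out of `jps -m` output (the `-m` option prints the arguments passed to each JVM's main method). This is a minimal sketch: the sample listing, pids, host names and application ID are made up for illustration, and the helper name `executor_processes` is hypothetical.

```python
from typing import Dict, List

# Illustrative `jps -m` output; pids, hosts and the application id are made up.
SAMPLE_JPS = """\
4101 CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@driver-host:40000 --executor-id 1 --hostname worker1 --cores 1 --app-id application_0000000000000_0001
4102 CoarseGrainedExecutorBackend --driver-url spark://CoarseGrainedScheduler@driver-host:40000 --executor-id 2 --hostname worker1 --cores 1 --app-id application_0000000000000_0001
3900 NodeManager
4300 Jps -m
"""


def executor_processes(jps_output: str) -> List[Dict[str, str]]:
    """Return pid, executor-id and app-id for each CoarseGrainedExecutorBackend line."""
    executors = []
    for line in jps_output.splitlines():
        tokens = line.split()
        # `jps -m` prints: <pid> <main class> <arguments passed to main>
        if len(tokens) < 2 or tokens[1] != "CoarseGrainedExecutorBackend":
            continue
        info = {"pid": tokens[0]}
        # Walk consecutive (flag, value) token pairs and keep the two IDs.
        for flag, value in zip(tokens[2:], tokens[3:]):
            if flag in ("--executor-id", "--app-id"):
                info[flag[2:]] = value
        executors.append(info)
    return executors


if __name__ == "__main__":
    for proc in executor_processes(SAMPLE_JPS):
        print(proc)
```

Grouping the results by `app-id` is a quick way to tell which executors on a node belong to which Application when several jobs share the cluster.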

YARN Web Information

History Information

YARN Cluster mode

- For how to upload the Spark application to the YARN cluster, please refer to


Check Application output


Jps Information


- Compared with Client mode, Cluster mode has an additional ApplicationMaster process

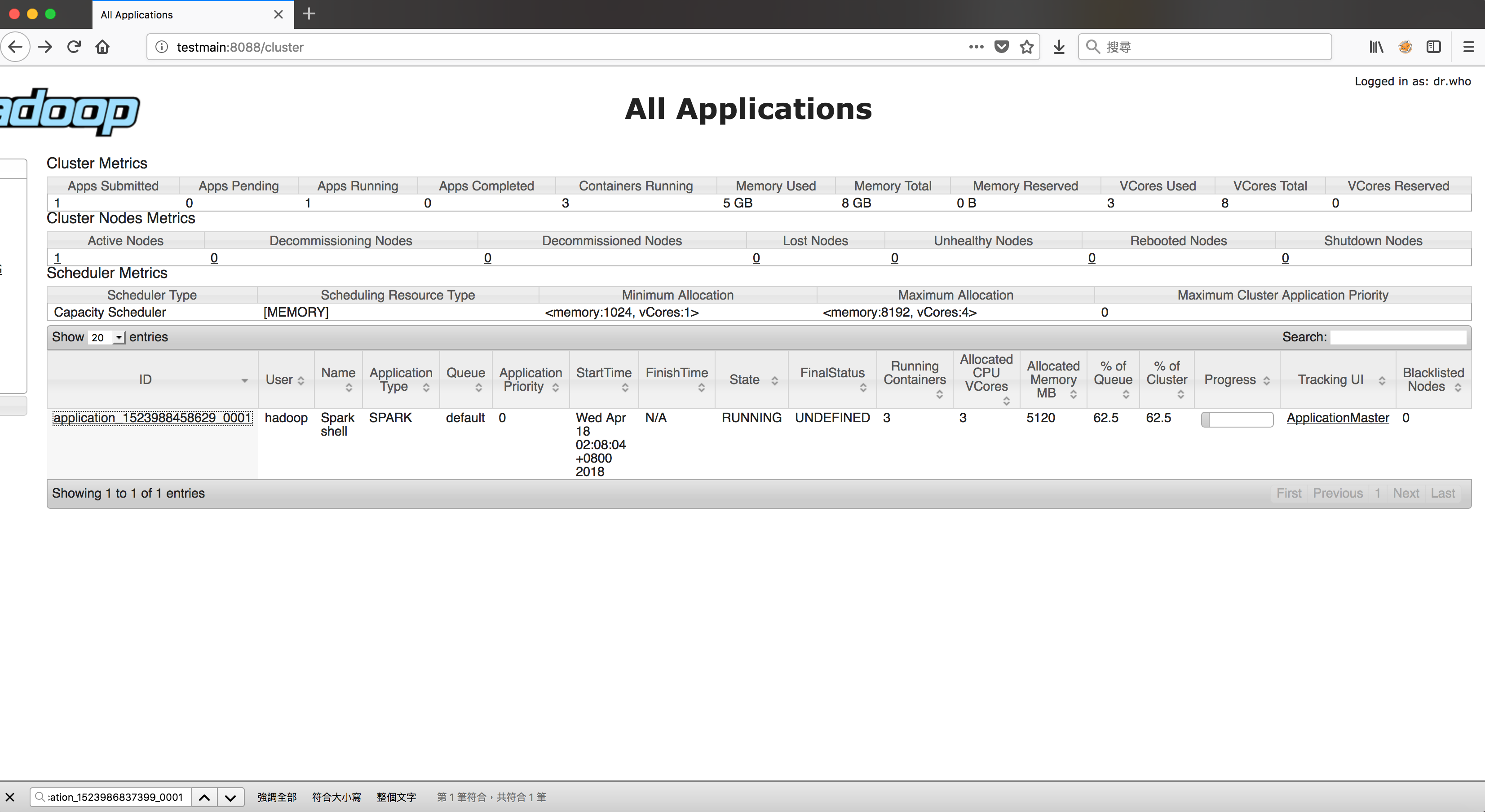

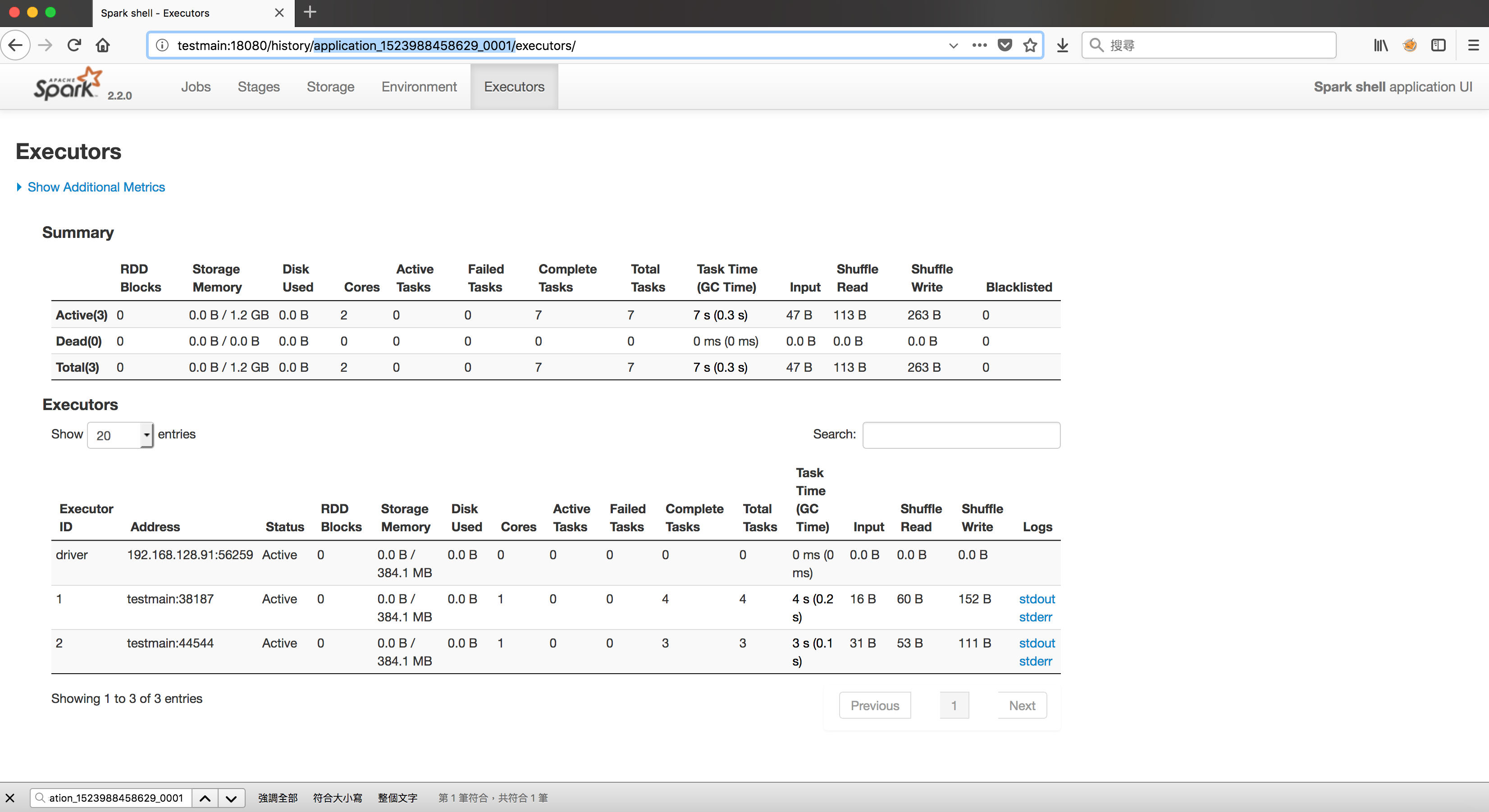
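The extra process can be found by diffing the process names in two `jps` listings. One detail worth knowing: in client mode Spark launches its YARN-side ApplicationMaster under the class name `ExecutorLauncher`, so `jps` shows an `ApplicationMaster` entry only in cluster mode. The listings below are hypothetical samples from one worker node, and the helper names are illustrative.

```python
from typing import Set

# Hypothetical `jps` listings from one worker node; real output depends on
# where the ApplicationMaster and executors were scheduled.
CLIENT_JPS = """\
5001 NodeManager
5102 ExecutorLauncher
5203 CoarseGrainedExecutorBackend
5204 CoarseGrainedExecutorBackend
5300 Jps
"""

CLUSTER_JPS = """\
6001 NodeManager
6102 ApplicationMaster
6203 CoarseGrainedExecutorBackend
6204 CoarseGrainedExecutorBackend
6300 Jps
"""


def process_names(jps_output: str) -> Set[str]:
    """Collect main-class names from `jps` output ("<pid> <name>" per line)."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def only_in_cluster(client_jps: str, cluster_jps: str) -> Set[str]:
    """Process names present in the cluster-mode listing but not in client mode."""
    return process_names(cluster_jps) - process_names(client_jps)


if __name__ == "__main__":
    print(only_in_cluster(CLIENT_JPS, CLUSTER_JPS))
```

Comparing name sets rather than pids keeps the check independent of process restarts between the two runs.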

YARN Web Information

History Information