Launching Spark Application on YARN Cluster

New Scala Project

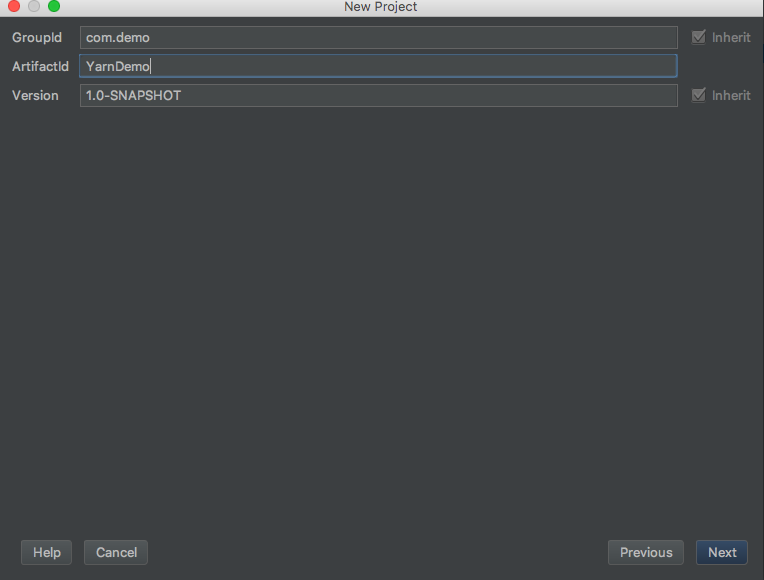

Create a Scala project in IDEA, following the wizard steps in order.

Leave the remaining settings at their defaults and click Next until the project has been created.

Add Properties and Dependency in pom.xml

Add the properties and dependencies that the Spark application needs to pom.xml.

Properties

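The original properties block did not survive extraction. What follows is a hedged sketch, not the author's pom.xml. This small Scala helper renders a `<properties>` section that keeps the Scala and Spark versions in one place. The property names (`scala.version`, `scala.binary.version`, `spark.version`) and the version numbers are assumptions and placeholders. Match the versions to what is installed on your cluster. The one rule the helper encodes is that the Scala binary version (for example `2.12`) is the first two components of the full Scala version.

```scala
// Hypothetical helper: renders a <properties> section for pom.xml.
// The versions are placeholders (the original values were lost);
// match them to the Spark and Scala versions installed on your cluster.
object PomProperties {
  val ScalaVersion = "2.12.15" // placeholder
  val SparkVersion = "3.3.0"   // placeholder

  // "2.12.15" -> "2.12": the binary version used as the Spark artifact suffix
  def scalaBinaryVersion(fullVersion: String): String =
    fullVersion.split('.').take(2).mkString(".")

  def render(scalaVersion: String, sparkVersion: String): String =
    s"""<properties>
       |  <scala.version>$scalaVersion</scala.version>
       |  <scala.binary.version>${scalaBinaryVersion(scalaVersion)}</scala.binary.version>
       |  <spark.version>$sparkVersion</spark.version>
       |</properties>""".stripMargin

  def main(args: Array[String]): Unit =
    println(render(ScalaVersion, SparkVersion))
}
```

Paste the printed fragment into pom.xml. Deriving the binary version keeps it from drifting out of sync with `scala.version`.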

Dependency

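The dependency list was also lost. Here is a minimal sketch that assumes pom.xml defines `scala.binary.version` and `spark.version` properties; these names are an assumption. Spark modules are published once per Scala binary version (for example `spark-core_2.12`), so the artifact ID carries that suffix. The `provided` scope reflects that `spark-submit` already puts Spark's jars on the classpath, so they need not be bundled into the application jar.

```scala
// Hypothetical helper: renders a <dependency> element for a Spark module,
// referencing assumed pom properties ${scala.binary.version} and ${spark.version}.
object PomDependency {
  // "$$" in an s-interpolated string emits a literal "$" for Maven's property syntax.
  def sparkDependency(module: String, scope: String = "provided"): String =
    s"""<dependency>
       |  <groupId>org.apache.spark</groupId>
       |  <artifactId>spark-${module}_$${scala.binary.version}</artifactId>
       |  <version>$${spark.version}</version>
       |  <scope>$scope</scope>
       |</dependency>""".stripMargin

  def main(args: Array[String]): Unit =
    println(sparkDependency("core"))
}
```

The application code for this step only needs `spark-core`. Add `sparkDependency("sql")` if you use `SparkSession` or DataFrames.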

Scala Code

Create a new Scala object named YarnApp in the com.demo package.

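The YarnApp source was not preserved. Below is a minimal stand-in, not the author's code. It sums the squares of 1 to 1000 with the RDD API and prints the result. It only needs `spark-core`. It deliberately does not call `setMaster`, so the master URL comes from `spark-submit --master yarn`. In cluster deploy mode, the `println` output goes to the driver's container stdout on YARN, not to the terminal that ran spark-submit.

```scala
package com.demo

import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical stand-in for com.demo.YarnApp: the computation is illustrative only.
object YarnApp {
  // Sum of x * x for x in 1..n, computed as a distributed RDD job.
  def sumOfSquares(sc: SparkContext, n: Int): Long =
    sc.parallelize(1 to n).map(x => x.toLong * x).reduce(_ + _)

  def main(args: Array[String]): Unit = {
    // No setMaster here: spark-submit --master yarn provides it.
    val conf = new SparkConf().setAppName("YarnApp")
    val sc = new SparkContext(conf)
    try {
      val result = sumOfSquares(sc, 1000)
      println(s"Sum of squares 1..1000 = $result")
    } finally {
      sc.stop()
    }
  }
}
```

If the job succeeds, the driver log should contain `Sum of squares 1..1000 = 333833500`.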

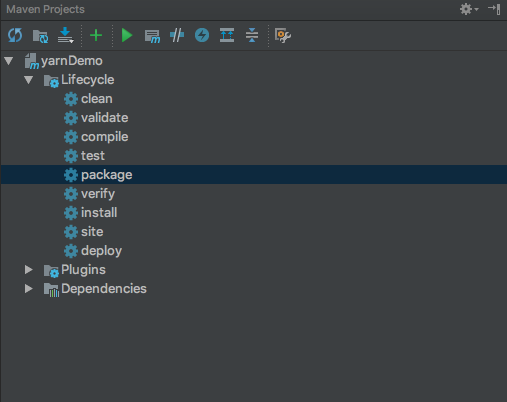

Run Maven Build

Double-click Package in the Maven tool window, as shown in the figure.

IDEA shows the build output in the panel at the bottom of the window. Once the build finishes, the corresponding log appears there.

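Double-clicking Package runs Maven's `package` phase, which is equivalent to running `mvn package` in the project root. The sketch below does this from Scala and assumes `mvn` is on the PATH. It then prints where Maven writes the jar by default: `target/<artifactId>-<version>.jar`, when pom.xml sets no `finalName`. The coordinates `yarn-demo` and `1.0-SNAPSHOT` are hypothetical, so substitute the ones from your pom.xml.

```scala
import java.io.File
import java.nio.file.{Path, Paths}
import scala.sys.process._

// Sketch: command-line equivalent of IDEA's "package" action, plus the default jar location.
object BuildJar {
  // Default Maven output when no <finalName> is configured.
  def defaultJarPath(projectDir: String, artifactId: String, version: String): Path =
    Paths.get(projectDir, "target", s"$artifactId-$version.jar")

  def main(args: Array[String]): Unit = {
    val projectDir = args.headOption.getOrElse(".")
    // Runs `mvn package` inside projectDir and waits for the exit code.
    val exitCode = Process(Seq("mvn", "package"), new File(projectDir)).!
    if (exitCode != 0) sys.error(s"mvn package failed with exit code $exitCode")
    // Hypothetical coordinates; use the artifactId and version from your pom.xml.
    println(defaultJarPath(projectDir, "yarn-demo", "1.0-SNAPSHOT"))
  }
}
```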

Start YARN

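The commands for this step were lost. On a standard Hadoop installation, YARN is started with `$HADOOP_HOME/sbin/start-yarn.sh`, which launches the ResourceManager and NodeManager daemons. `jps` lists the running JVMs. The sketch assumes a single-node (pseudo-distributed) setup where both daemons run locally, and it checks the `jps` output for them. On a multi-node cluster, the NodeManagers run on the worker hosts instead.

```scala
import scala.sys.process._

// Sketch for a single-node setup: verify the YARN daemons appear in `jps` output.
object CheckYarnDaemons {
  val Required: Set[String] = Set("ResourceManager", "NodeManager")

  // jps prints one "<pid> <main class name>" pair per line, e.g. "4242 ResourceManager".
  def missingDaemons(jpsOutput: String): Set[String] = {
    val running = jpsOutput
      .split("\n")
      .map(_.trim.split("\\s+"))
      .collect { case Array(_, name, _*) => name }
      .toSet
    Required -- running
  }

  def main(args: Array[String]): Unit = {
    // Assumes $HADOOP_HOME/sbin/start-yarn.sh has already been run on this machine.
    val missing = missingDaemons(Seq("jps").!!)
    if (missing.isEmpty) println("YARN daemons are running")
    else println(s"Not running: ${missing.mkString(", ")}")
  }
}
```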

Submit Spark Application

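The original submit command was lost. What follows is a hedged reconstruction that uses standard `spark-submit` options (`--class`, `--master yarn`, `--deploy-mode`). Whether the author used cluster or client mode is not known, so cluster mode here is an assumption. `spark-submit` finds the ResourceManager through `HADOOP_CONF_DIR` or `YARN_CONF_DIR`, so one of them must point to the cluster's Hadoop configuration directory. The default jar path is hypothetical.

```scala
import scala.sys.process._

// Sketch: build and run a spark-submit command for com.demo.YarnApp on YARN.
object SubmitYarnApp {
  def submitCommand(jarPath: String,
                    mainClass: String = "com.demo.YarnApp",
                    deployMode: String = "cluster"): Seq[String] =
    Seq(
      "spark-submit",
      "--class", mainClass,
      "--master", "yarn",
      "--deploy-mode", deployMode,
      jarPath
    )

  def main(args: Array[String]): Unit = {
    // Hypothetical default path; pass the real jar path as the first argument.
    val jar = args.headOption.getOrElse("target/yarn-demo-1.0-SNAPSHOT.jar")
    val exitCode = submitCommand(jar).! // streams spark-submit output to this console
    sys.exit(exitCode)
  }
}
```

Passing the arguments as a `Seq` avoids shell quoting issues if the jar path contains spaces.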

Check Application Output

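In cluster mode, the driver's `println` output lands in the YARN container logs rather than in the spark-submit terminal. Assuming log aggregation is enabled (`yarn.log-aggregation-enable`), these logs can be fetched with `yarn logs -applicationId <appId>`, typically after the application finishes. The sketch pulls the first ID of the form `application_<clusterTimestamp>_<sequence>` out of captured spark-submit output and runs that command. Feed it both stdout and stderr, since Spark's client logging usually goes to stderr.

```scala
import scala.sys.process._

// Sketch: extract the YARN application ID from spark-submit output and fetch its logs.
object FetchYarnLogs {
  // YARN application IDs look like application_<clusterTimestamp>_<sequence>.
  private val AppId = """application_\d+_\d+""".r

  def findAppId(text: String): Option[String] = AppId.findFirstIn(text)

  def logsCommand(appId: String): Seq[String] =
    Seq("yarn", "logs", "-applicationId", appId)

  def main(args: Array[String]): Unit = {
    // Expects the captured spark-submit output on stdin.
    val submitOutput = scala.io.Source.stdin.mkString
    findAppId(submitOutput) match {
      case Some(id) => println(logsCommand(id).!!)
      case None     => System.err.println("No YARN application ID found in input")
    }
  }
}
```

Alternatively, the ResourceManager web UI (port 8088 by default) links to each container's stdout.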