Launching Spark on YARN

Launching YARN


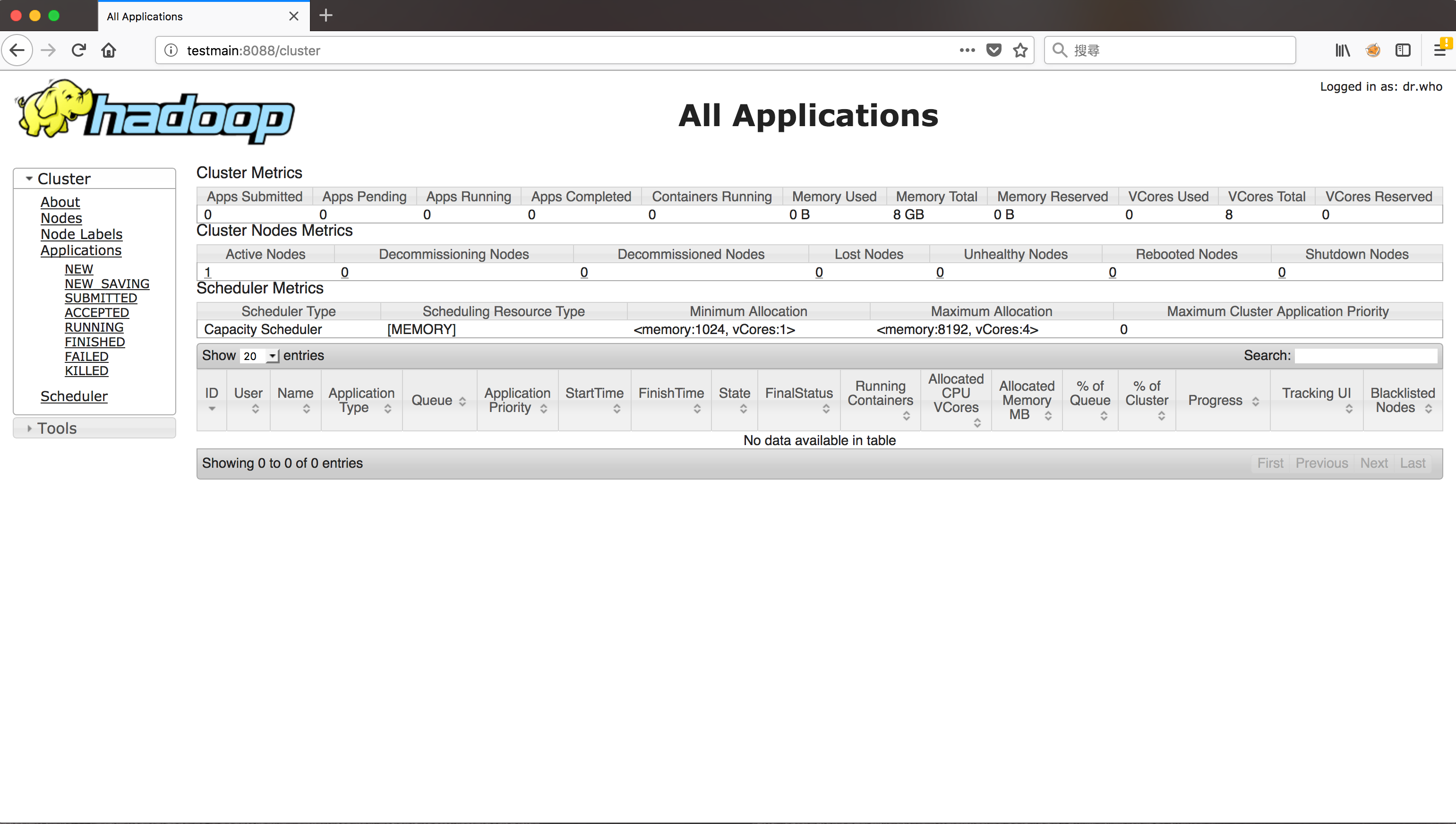

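- Visit port 8080 to confirm that YARN is running normally (a stock ResourceManager web UI listens on 8088 unless yarn.resourcemanager.webapp.address is changed)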
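To run the same check without a browser, the ResourceManager also exposes a REST API whose `/ws/v1/cluster/info` endpoint reports the ResourceManager's service state. A minimal sketch, assuming the web UI is reachable on the port used above; the helper names are hypothetical.

```python
import json
from urllib.request import urlopen


def cluster_info_url(host: str, port: int = 8080) -> str:
    """Build the ResourceManager REST URL for cluster information.

    Port 8080 mirrors the step above; a stock ResourceManager web UI
    listens on 8088 unless yarn.resourcemanager.webapp.address is changed.
    """
    return f"http://{host}:{port}/ws/v1/cluster/info"


def is_cluster_started(info: dict) -> bool:
    """Return True if a /ws/v1/cluster/info payload reports STARTED."""
    return info.get("clusterInfo", {}).get("state") == "STARTED"


def check_yarn(host: str, port: int = 8080, timeout: float = 5.0) -> bool:
    """Fetch the cluster info over HTTP and report whether YARN is up."""
    with urlopen(cluster_info_url(host, port), timeout=timeout) as resp:
        return is_cluster_started(json.load(resp))
```

For example, `check_yarn("localhost")` should return True once the ResourceManager reports STARTED; `localhost` is a placeholder for your ResourceManager host.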

Configuration


Launching Spark on YARN
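The exact submit command is not preserved here. Judging by the log, it appears to run the bundled examples JAR in client mode (the Spark UI and the JAR server start on the local machine before the application is submitted). A hedged sketch that assembles such a spark-submit call; the SparkPi main class and its argument `10` are assumptions, and `spark_submit_cmd` is a hypothetical helper.

```python
import shlex

# JAR path taken from the log in this section; the main class and its
# argument are assumptions (SparkPi ships in the Spark examples JAR).
EXAMPLES_JAR = "/opt/software/spark/examples/jars/spark-examples_2.11-2.2.0.jar"


def spark_submit_cmd(main_class: str, jar: str, *app_args: str,
                     deploy_mode: str = "client") -> list:
    """Assemble a spark-submit argument list that targets YARN."""
    return [
        "spark-submit",
        "--master", "yarn",            # YARN allocates the containers
        "--deploy-mode", deploy_mode,  # "client": driver runs on this machine
        "--class", main_class,
        jar,
        *app_args,
    ]


cmd = spark_submit_cmd("org.apache.spark.examples.SparkPi", EXAMPLES_JAR, "10")
print(shlex.join(cmd))
# To launch for real (HADOOP_CONF_DIR or YARN_CONF_DIR must be set):
#   import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string keeps each argument intact if a path ever contains spaces.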


Log Explanation

- ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.128.91:4040

- The Spark UI binds to all interfaces (0.0.0.0) and is served on port 4040, here at http://192.168.128.91:4040

- spark.SparkContext: Added JAR file:/opt/software/spark/examples/jars/spark-examples_2.11-2.2.0.jar at spark://192.168.128.91:34636/jars/spark-examples_2.11-2.2.0.jar with timestamp 1522255743082

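- The specified application JAR is added to the SparkContext and served by the driver at spark://192.168.128.91:34636, from where executors can fetch it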

- client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

- Connects to the ResourceManager on port 8032 (the default ResourceManager RPC port)
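The address 0.0.0.0:8032 is what a client resolves when yarn.resourcemanager.hostname is left at its default of 0.0.0.0, because yarn.resourcemanager.address defaults to ${yarn.resourcemanager.hostname}:8032; connecting to it presumably works here because the client runs on the ResourceManager host. A simplified sketch of that lookup over a yarn-site.xml string, assuming only these two properties matter; it is not Hadoop's Configuration class, and `resolve_rm_address` is a hypothetical name.

```python
import xml.etree.ElementTree as ET

RM_PORT = 8032                                     # default RM RPC port
DEFAULT_RM_HOST = "0.0.0.0"                        # default yarn.resourcemanager.hostname
HOST_PLACEHOLDER = "${yarn.resourcemanager.hostname}"


def resolve_rm_address(yarn_site_xml: str) -> str:
    """Return the RM RPC address a client would use, given yarn-site.xml text.

    Simplified: reads only yarn.resourcemanager.hostname and
    yarn.resourcemanager.address, and expands only the hostname placeholder.
    """
    props = {}
    for prop in ET.fromstring(yarn_site_xml).iter("property"):
        name, value = prop.findtext("name"), prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()

    host = props.get("yarn.resourcemanager.hostname", DEFAULT_RM_HOST)
    address = props.get("yarn.resourcemanager.address",
                        f"{HOST_PLACEHOLDER}:{RM_PORT}")
    return address.replace(HOST_PLACEHOLDER, host)
```

With an empty `<configuration/>` this yields the 0.0.0.0:8032 seen in the log; setting yarn.resourcemanager.hostname is what a multi-node cluster would normally need.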

- yarn.Client: Requesting a new application from cluster with 1 NodeManagers

- Requests a new application from the ResourceManager; the cluster currently has 1 NodeManager

- Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)

- Checks that the application does not request more memory than the cluster's maximum per container (8192 MB here)
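The 8192 MB cap is the cluster's per-container maximum (yarn.scheduler.maximum-allocation-mb, 8192 MB by default), and Spark compares both the executor request and the AM request, each including overhead, against it. A sketch of that comparison; the 1408 MB executor figure assumes the default spark.executor.memory of 1g plus the 384 MB minimum overhead, and the function name is hypothetical.

```python
def verify_cluster_resources(executor_total_mb: int, am_total_mb: int,
                             max_container_mb: int = 8192) -> None:
    """Raise ValueError if either container request exceeds the per-container cap.

    Totals are heap + overhead in MB, mirroring the comparison behind the
    "Verifying our application ..." log line.
    """
    for label, requested in (("executor", executor_total_mb), ("AM", am_total_mb)):
        if requested > max_container_mb:
            raise ValueError(
                f"Required {label} memory ({requested} MB) is above the max "
                f"threshold ({max_container_mb} MB) of this cluster"
            )


# Default executor (1024 MB + 384 MB overhead) and the AM from the log (896 MB).
verify_cluster_resources(executor_total_mb=1024 + 384, am_total_mb=896)
```

Failing fast on the client side avoids submitting an application that YARN could never schedule.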

- yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

- The ApplicationMaster container will be allocated 896 MB of memory: 512 MB for the AM itself plus 384 MB of overhead
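The 896 MB follows from Spark's overhead rule, overhead = max(10% of the AM memory, 384 MB). With the default spark.yarn.am.memory of 512 MB, 10% is only 51 MB, so the 384 MB floor applies. The sketch below reproduces that arithmetic with Spark 2.x defaults; `normalize_to_yarn` is an assumption illustrating how the scheduler typically rounds requests up to a multiple of yarn.scheduler.minimum-allocation-mb (1024 MB by default), so the container YARN actually grants may be 1024 MB.

```python
import math

MEMORY_OVERHEAD_FACTOR = 0.10  # Spark 2.x default overhead fraction
MEMORY_OVERHEAD_MIN_MB = 384   # Spark 2.x minimum overhead


def am_container_mb(am_memory_mb: int = 512) -> tuple:
    """Return (total_mb, overhead_mb) for the ApplicationMaster request."""
    overhead = max(int(MEMORY_OVERHEAD_FACTOR * am_memory_mb), MEMORY_OVERHEAD_MIN_MB)
    return am_memory_mb + overhead, overhead


def normalize_to_yarn(request_mb: int, min_allocation_mb: int = 1024) -> int:
    """Round a request up to a multiple of the scheduler's minimum allocation (assumed)."""
    return math.ceil(request_mb / min_allocation_mb) * min_allocation_mb


total, overhead = am_container_mb()
print(f"{total} MB including {overhead} MB overhead")  # 896 MB including 384 MB overhead
print(normalize_to_yarn(total))                           # 1024
```

The 384 MB floor dominates until the AM heap exceeds 3840 MB, after which the 10% term takes over.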

- yarn.Client: Setting up container launch context for our AM

- Sets up the container launch context (launch command, environment, and resources) for the ApplicationMaster

- yarn.Client: Setting up the launch environment for our AM container

- Sets up the runtime environment for the ApplicationMaster container

- yarn.Client: Preparing resources for our AM container

- Prepares the resources the ApplicationMaster container needs

- yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

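- This is a tuning opportunity: upload the JARs under SPARK_HOME/jars to HDFS once and point spark.yarn.jars (or spark.yarn.archive) at them, so these libraries are not re-uploaded on every submission, reducing network overhead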
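One way to apply this tuning is spark.yarn.archive, which takes a single archive with the JARs at its root. A sketch of building that archive in Python, assuming SPARK_HOME points at the installation used here; `build_spark_archive` and the output file name are hypothetical.

```python
import zipfile
from pathlib import Path


def build_spark_archive(spark_home: str, out_path: str) -> list:
    """Pack $SPARK_HOME/jars/*.jar into one archive with the JARs at its root.

    Returns the JAR names written, in archive order.
    """
    jar_files = sorted((Path(spark_home) / "jars").glob("*.jar"))
    # JARs are already compressed, so store them as-is (ZIP_STORED).
    with zipfile.ZipFile(out_path, "w", compression=zipfile.ZIP_STORED) as zf:
        for jar in jar_files:
            zf.write(jar, arcname=jar.name)  # flat layout: no "jars/" prefix
    return [jar.name for jar in jar_files]
```

After building it, upload the archive once (for example `hdfs dfs -put spark-libs.zip /spark/`) and set spark.yarn.archive to hdfs:///spark/spark-libs.zip in spark-defaults.conf; the HDFS path is only an example.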

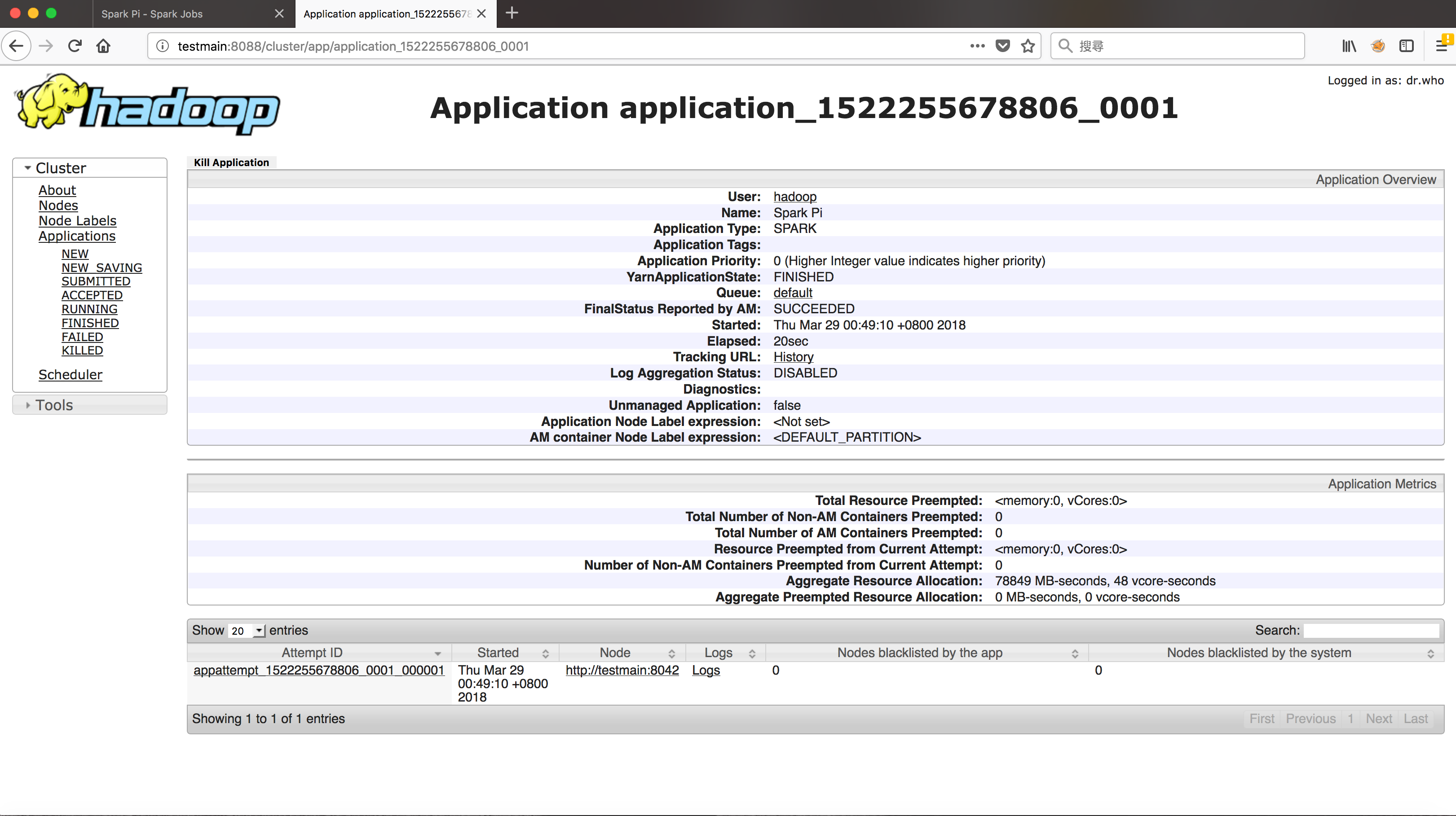

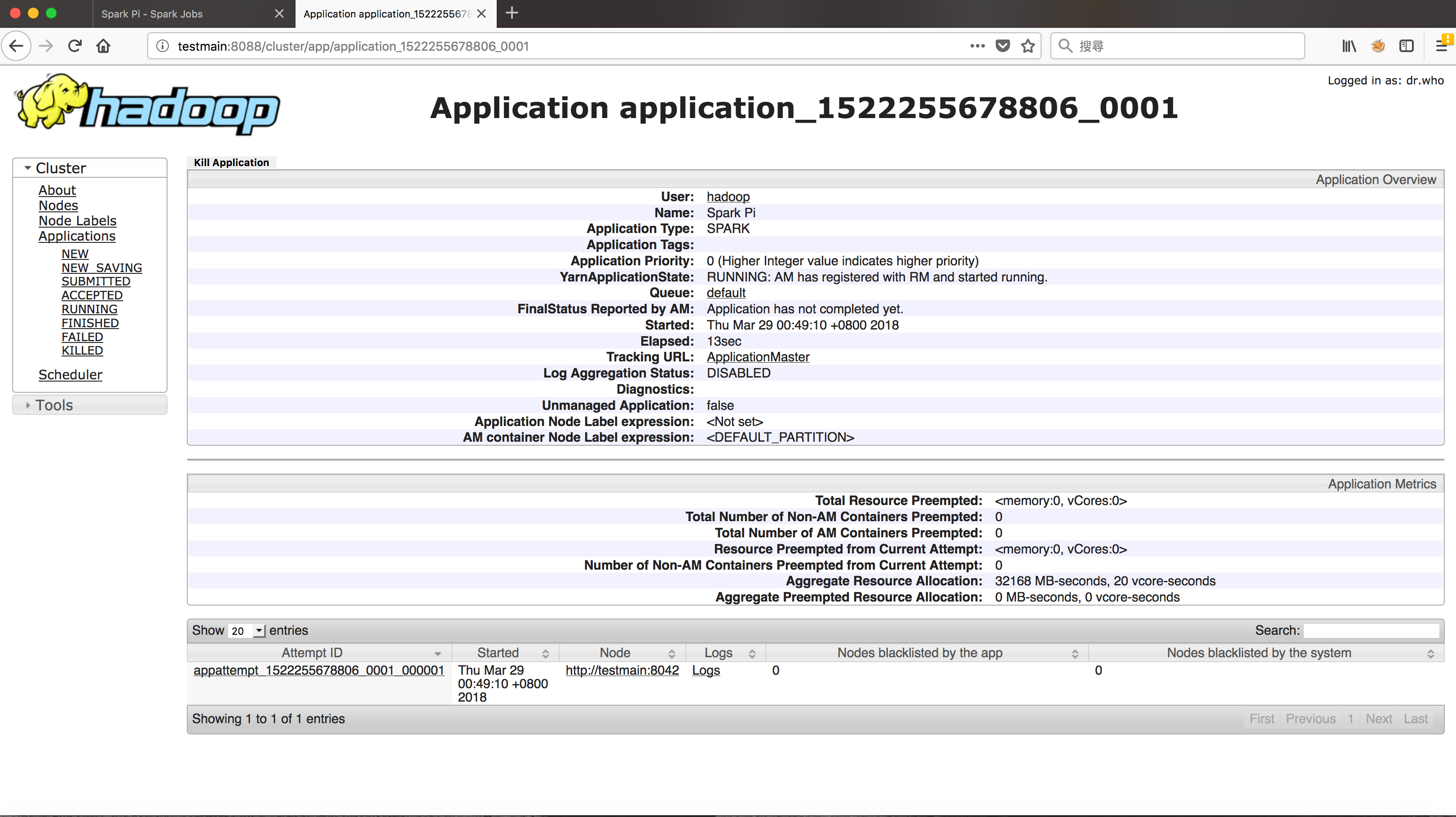

- yarn.Client: Submitting application application_1522255678806_0001 to ResourceManager

- Submits the application to the ResourceManager with ID application_1522255678806_0001
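YARN application IDs have the form application_<clusterTimestamp>_<sequence>, where the timestamp is the ResourceManager's start time in milliseconds and the sequence counts applications submitted since then. A small parsing sketch; the function name is hypothetical.

```python
import re
from datetime import datetime, timezone

APP_ID_RE = re.compile(r"^application_(\d+)_(\d+)$")


def parse_application_id(app_id: str):
    """Split a YARN application ID into (RM start time as UTC datetime, sequence)."""
    m = APP_ID_RE.match(app_id)
    if m is None:
        raise ValueError(f"not a YARN application ID: {app_id!r}")
    cluster_ts_ms, seq = int(m.group(1)), int(m.group(2))
    started = datetime.fromtimestamp(cluster_ts_ms / 1000, tz=timezone.utc)
    return started, seq


started, seq = parse_application_id("application_1522255678806_0001")
print(started.isoformat(), seq)
```

For the ID above this gives a ResourceManager start time of 2018-03-28 16:47:58 UTC and sequence number 1, i.e. the first application submitted since the ResourceManager started.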

Web UI

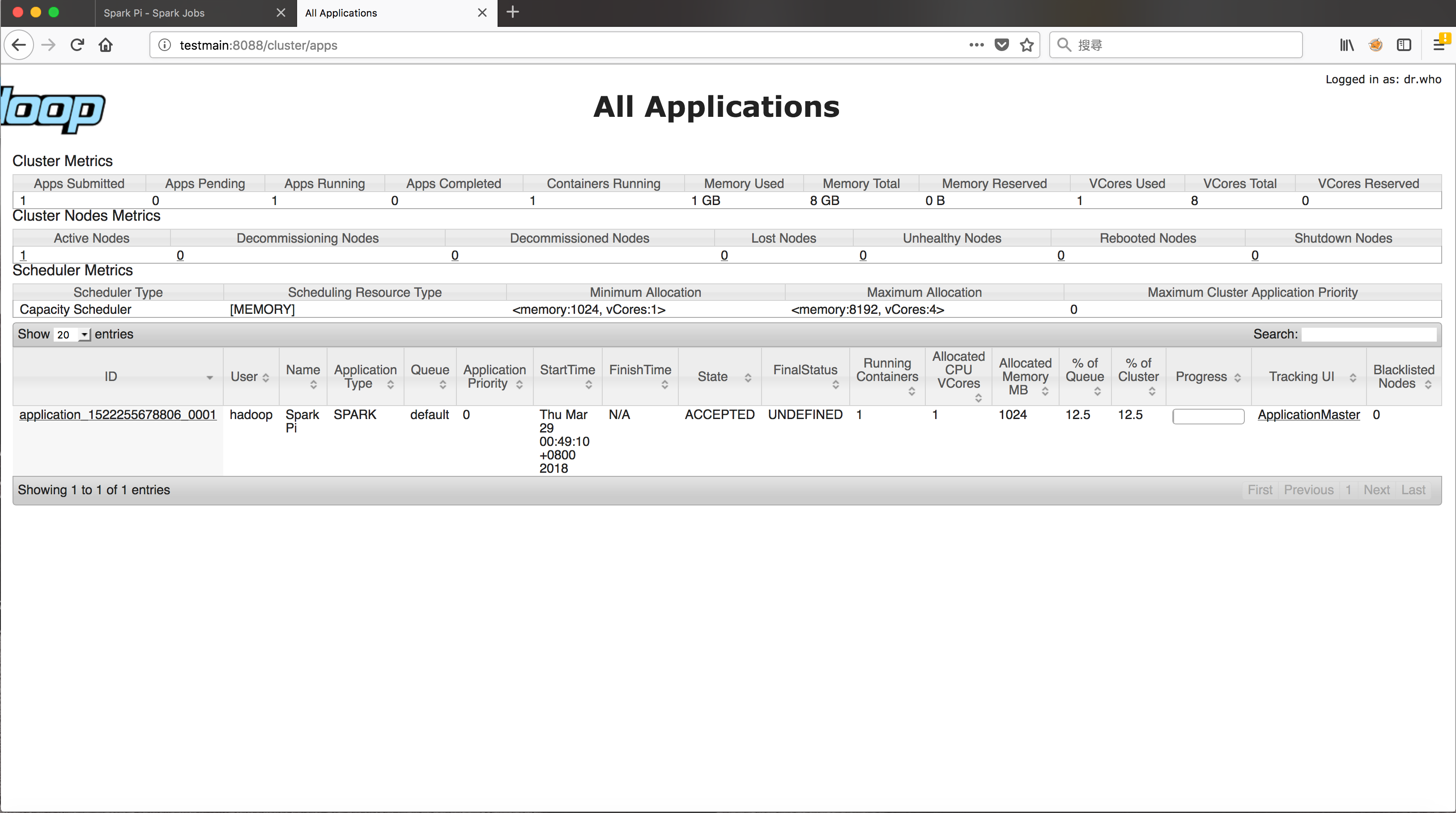

- After the Spark job is submitted, the corresponding application's state on YARN's All Applications page changes to ACCEPTED

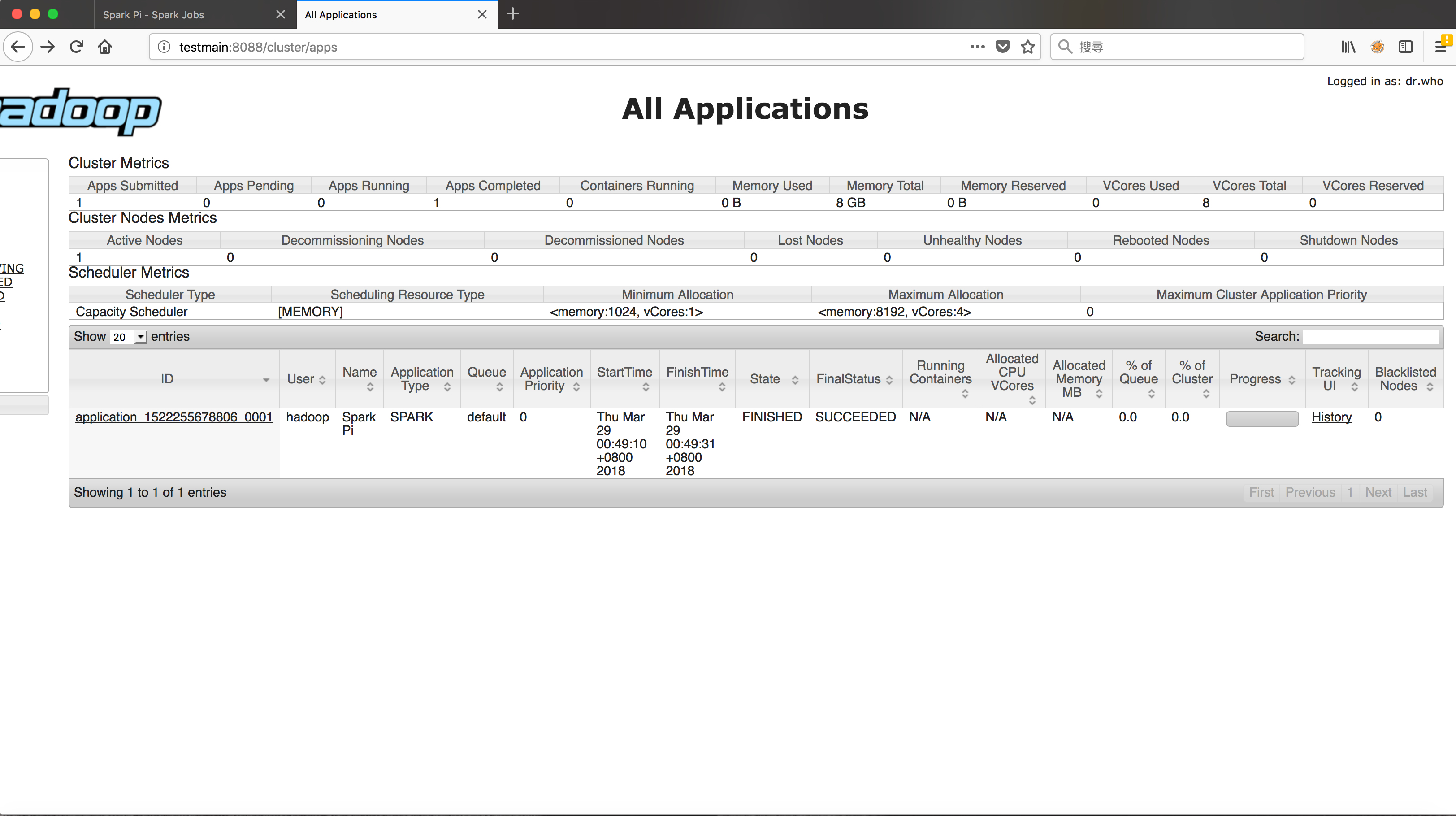

- After the Spark job completes, the corresponding application's state on the All Applications page changes to FINISHED
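The same transitions can be watched through the ResourceManager REST API at /ws/v1/cluster/apps/<appId>, whose `app.state` field normally moves from ACCEPTED to RUNNING (once the AM registers) and then to a terminal state such as FINISHED, while `finalStatus` reports the outcome (e.g. SUCCEEDED). A polling sketch; the fetcher is injectable so the loop can be exercised without a cluster, and the helper names and base URL are assumptions.

```python
import json
import time
from urllib.request import urlopen

TERMINAL_STATES = {"FINISHED", "FAILED", "KILLED"}


def fetch_app(rm_web_url: str, app_id: str) -> dict:
    """GET /ws/v1/cluster/apps/<appId> and return the "app" object."""
    with urlopen(f"{rm_web_url}/ws/v1/cluster/apps/{app_id}", timeout=5) as resp:
        return json.load(resp)["app"]


def wait_for_app(app_id: str, fetch, poll_seconds: float = 2.0, sleep=time.sleep):
    """Poll until the app reaches a terminal state.

    Returns (distinct states seen in order, finalStatus of the last poll).
    """
    seen = []
    while True:
        app = fetch(app_id)
        state = app["state"]
        if not seen or seen[-1] != state:
            seen.append(state)  # record each transition once
        if state in TERMINAL_STATES:
            return seen, app.get("finalStatus")
        sleep(poll_seconds)
```

For example: `wait_for_app("application_1522255678806_0001", lambda a: fetch_app("http://localhost:8080", a))`, replacing the base URL with your ResourceManager web address.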